Virtualizing The Canvas

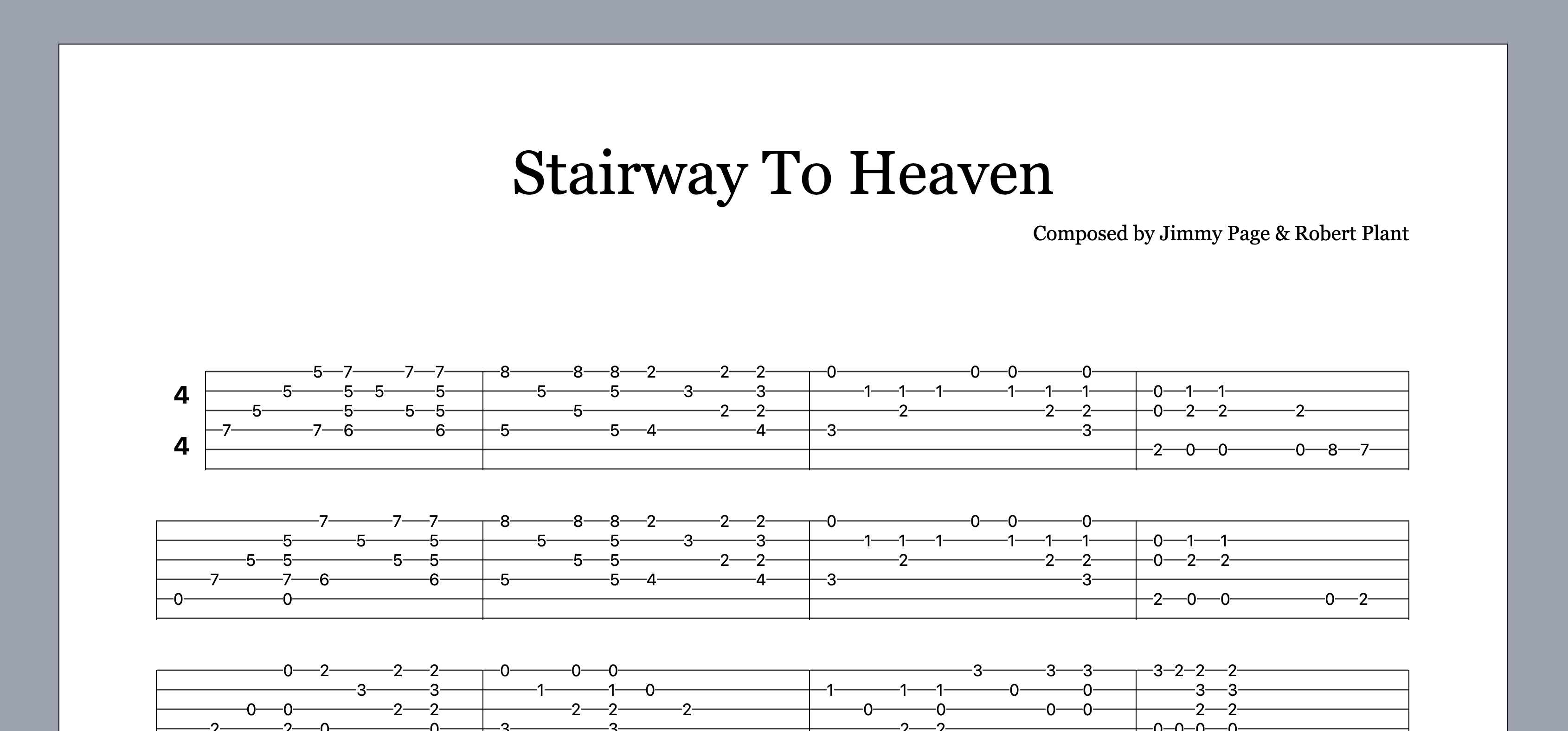

When I first set out to build Muzart, I just thought it would be fun to play around with React and flex layouts. It looked a little like this:

Pretty simple looking, eh? One thing I loved about this approach is that the browser managed all of the layout, so I just needed some CSS and no manual layout code.

I quickly found out that performance was abysmal once the tab got a bit larger, so I moved over to manually laying out the tab as an SVG document. This worked well for quite some time, but eventually the virtual tree and heavy re-render cycles of something like a musical score didn't pan out. This was especially noticeable when switching between tracks. Knowing that SVG performance also degrades as the number of elements grows, and that there would be many more elements to add, I made the jump to `<canvas>`.

The problem with `<canvas>` is that you typically have to decide on a buffer size up front. We could try to size it to fit the entire score, but this has a few problems:

- We don't know how large the score will be ahead of time, so we can't guarantee it will fit within the browser's size or memory limits.

- Changing the dimensions will cause a "flicker", as the canvas is cleared to create the new backing buffer.

- Even if we didn't have limitations, rendering the entire score in advance would have similar performance issues as SVG.

Given these problems, I decided I needed to virtualize the canvas. By "virtualize", I mean rendering only what's inside a viewport, similar to what react-virtualized does for large lists and tables. Let's dive in and learn how to build one.
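At its core, that kind of virtualization comes down to a visibility test. Here's a minimal TypeScript sketch of the idea, assuming every drawable thing knows its bounding box in user space; the `Drawable` type, the `visibleItems` helper, and the example ids are hypothetical, not part of Muzart.

```typescript
// Axis-aligned rectangle in user space
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Hypothetical drawable: anything that knows its bounding box in user space
interface Drawable {
  id: string;
  bounds: Rect;
}

// True if the two rectangles overlap (rectangles that only touch at an edge do not count)
function intersects(a: Rect, b: Rect): boolean {
  return (
    a.x < b.x + b.width &&
    b.x < a.x + a.width &&
    a.y < b.y + b.height &&
    b.y < a.y + a.height
  );
}

// Keep only the items that overlap the viewport; everything else is never drawn
function visibleItems(items: Drawable[], viewport: Rect): Drawable[] {
  return items.filter((item) => intersects(item.bounds, viewport));
}

const items: Drawable[] = [
  { id: "measure-1", bounds: { x: 0, y: 0, width: 10, height: 10 } },
  { id: "measure-2", bounds: { x: 50, y: 50, width: 10, height: 10 } },
];
const onScreen = visibleItems(items, { x: 5, y: 5, width: 20, height: 20 });
```

Only `measure-1` overlaps the viewport, so it's the only item that would be drawn. A linear scan like this is the simplest option; with very many items, an index sorted by position could narrow the search further.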

📝 Requirements

First, one of the more important parts of any project: let's establish the requirements of what we're trying to build!

- Able to represent what we're drawing in our own space, so we don't have to care about pixels and pixel ratios. This is similar to how the `viewBox` attribute of the `<svg>` element maps SVG user units onto the rendered element.

- Scrolling and zooming should be handled by the canvas.

- Automatically responds to changes in size and pixel ratios.

- Easy mapping of event positions from device space into user space.

- Should feel as smooth as possible.

And some nice-to-haves, but not strictly necessary:

- Implement a scene graph / object model to simplify clipping and rendering.

- Any helpers on top of the canvas API to simplify drawing.

- Wrapping the canvas context to be able to, for example, render an SVG instead.

Here's where we're headed:

You can scroll around to see the whole canvas. Hold Ctrl (or ⌘ on macOS) while scrolling to zoom in/out. Note that, at the time of writing, zooming in/out and then quickly switching to a regular scroll keeps zooming; that's something I still have to work on!
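To give an idea of how scrolling and zooming can both be expressed as changes to the viewport, here's a rough sketch (not the demo's actual code). It assumes wheel deltas in CSS pixels, and the `WheelInput` shape, `applyWheel`, and the `ZOOM_SPEED` constant are all hypothetical. Zooming scales the viewport around the user-space point under the cursor so that point stays put.

```typescript
interface ViewportRect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Assumed inputs, taken from a WheelEvent whose deltaMode is DOM_DELTA_PIXEL
interface WheelInput {
  deltaX: number;
  deltaY: number;
  zoomModifier: boolean; // e.g. event.ctrlKey || event.metaKey
  offsetX: number; // cursor position relative to the canvas, CSS px
  offsetY: number;
  canvasWidth: number; // visible canvas size, CSS px
  canvasHeight: number;
}

// Hypothetical tuning constant: how strongly one pixel of wheel delta zooms
const ZOOM_SPEED = 0.01;

function applyWheel(v: ViewportRect, input: WheelInput): ViewportRect {
  // User-space units per CSS pixel
  const unitsPerPxX = v.width / input.canvasWidth;
  const unitsPerPxY = v.height / input.canvasHeight;

  if (!input.zoomModifier) {
    // Plain scroll: pan by the wheel delta converted into user-space units
    return {
      ...v,
      x: v.x + input.deltaX * unitsPerPxX,
      y: v.y + input.deltaY * unitsPerPxY,
    };
  }

  // Scrolling down (positive deltaY) grows the viewport, i.e. zooms out
  const zoom = Math.exp(input.deltaY * ZOOM_SPEED);
  // The user-space point currently under the cursor
  const anchorX = v.x + input.offsetX * unitsPerPxX;
  const anchorY = v.y + input.offsetY * unitsPerPxY;
  // Scale the viewport around that point so it stays under the cursor
  return {
    x: anchorX - (anchorX - v.x) * zoom,
    y: anchorY - (anchorY - v.y) * zoom,
    width: v.width * zoom,
    height: v.height * zoom,
  };
}
```

In a browser, the inputs would come from a `wheel` listener registered with `{ passive: false }`, so it can call `event.preventDefault()` and stop the page itself from scrolling or zooming.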

Now that we know what we want, let's get to building!

🧑‍🎨 Creating a virtual canvas

So the first two things we need to figure out are how to create a canvas element that can adapt to arbitrary sizes, screen resolutions, and device pixel ratios, and how to scope painting to a viewport.

When I first started building a canvas for Muzart, I took the naive approach: create a canvas that can fit the entirety of what I need to render and draw everything on it. This worked well until I started using guitar tabs that were quite large. I very quickly found it to be either too slow, or the browser tab would crash because there wasn't enough memory to store the entire canvas.

My second iteration did two things. First, I created a fixed-size canvas corresponding to the size of the window. Second, since I wasn't rendering the entire guitar tab, I needed a viewport that could move around "user space" and map that back to the canvas. You can think of "user space" like the unitless coordinate system in an SVG, and the viewport would be equivalent to the `viewBox` attribute on `<svg>`.

The key math involved here is mapping between user space and canvas space, which we can package up nicely in a class:

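```typescript
class Point {
  constructor(
    public x: number,
    public y: number,
  ) {}
}

class Viewport {
  constructor(
    // Offset for this viewport, in user space
    public x: number,
    public y: number,
    // Size of the viewport, in user space
    public width: number,
    public height: number,
    // Visible size of the canvas, in CSS pixels
    public canvas: { width: number; height: number },
  ) {}

  /** Map a userspace point to the canvas space */
  pointToCanvas(pt: Point) {
    return new Point(
      (pt.x - this.x) * this.userSpaceCanvasWidthRatio,
      (pt.y - this.y) * this.userSpaceCanvasHeightRatio,
    );
  }

  /** Canvas units per user-space unit, horizontally */
  get userSpaceCanvasWidthRatio() {
    return this.canvas.width / this.width;
  }

  /** Canvas units per user-space unit, vertically */
  get userSpaceCanvasHeightRatio() {
    return this.canvas.height / this.height;
  }
}
```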

So when we map points from user space to the canvas, we have a ratio that represents the difference in scale (`userSpaceCanvasWidthRatio` and `userSpaceCanvasHeightRatio` above) and an offset for the viewport. One way to express this in words, assuming both ratios are 10:5 (user space to canvas, i.e. a value of 0.5):

Every 10 units of user space have to fit in 5 units of the canvas, after translating the viewport to the canvas origin.
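To check the arithmetic, and to cover the requirement of mapping event positions back into user space, here's a standalone sketch with the same forward mapping plus its inverse. The `ViewportState` shape and `canvasToUserSpace` are illustrative assumptions; `canvasWidth`/`canvasHeight` are taken to be the visible canvas size in CSS pixels.

```typescript
interface Point {
  x: number;
  y: number;
}

// Hypothetical flat version of the viewport plus the visible canvas size
interface ViewportState {
  x: number; // viewport offset, user space
  y: number;
  width: number; // viewport size, user space
  height: number;
  canvasWidth: number; // visible canvas size, CSS px
  canvasHeight: number;
}

// User space -> canvas: translate to the viewport origin, then scale
function pointToCanvas(v: ViewportState, pt: Point): Point {
  return {
    x: (pt.x - v.x) * (v.canvasWidth / v.width),
    y: (pt.y - v.y) * (v.canvasHeight / v.height),
  };
}

// Canvas -> user space: the inverse, undoing the scale and then the translation
function canvasToUserSpace(v: ViewportState, pt: Point): Point {
  return {
    x: pt.x * (v.width / v.canvasWidth) + v.x,
    y: pt.y * (v.height / v.canvasHeight) + v.y,
  };
}

const state: ViewportState = { x: 100, y: 50, width: 200, height: 100, canvasWidth: 100, canvasHeight: 50 };
const onCanvas = pointToCanvas(state, { x: 120, y: 60 });
const backInUserSpace = canvasToUserSpace(state, onCanvas);
```

With a 200×100 viewport at (100, 50) drawn onto a 100×50 canvas, the ratio is 0.5, so the user-space point (120, 60) lands at (10, 5) on the canvas, and mapping (10, 5) back gives (120, 60). For a pointer event, something like `canvasToUserSpace(state, { x: event.offsetX, y: event.offsetY })` would work, assuming the canvas has no border or padding.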

With all of that code in place, we can start drawing things within the viewport:

```typescript
const draw = (
  canvas: HTMLCanvasElement,
  viewport: Viewport,
  render: (context: CanvasRenderingContext2D, viewport: Viewport) => void,
) => {
  const context = canvas.getContext("2d", { willReadFrequently: false });
  if (!context) {
    return;
  }

  // User-space-to-CSS-pixel ratio, scaled up to device pixels
  const factorX = viewport.userSpaceCanvasWidthRatio * window.devicePixelRatio;
  const factorY = viewport.userSpaceCanvasHeightRatio * window.devicePixelRatio;

  // Clear the whole backing buffer (in device pixels) with no transform applied
  context.resetTransform();
  context.clearRect(0, 0, canvas.width, canvas.height);

  context.setTransform(
    // Scale x
    factorX,
    0,
    // Scale y
    0,
    factorY,
    // Translation
    -factorX * viewport.x,
    -factorY * viewport.y,
  );

  // This function can draw all the elements in the scene, no translating/scaling needed!
  // We can also use the viewport argument here to not draw anything outside of the viewport.
  render(context, viewport);
};
```

When I look at that code, two questions come to mind:

- Why is the translation portion multiplied by the factor?

- Why is the translation negated?

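Both questions can be answered by understanding what `setTransform` does, and how it manipulates anything we draw with the context.

The `setTransform(a, b, c, d, e, f)` method sets the transformation matrix of the context, which is a 3x3 matrix that determines how a two-dimensional point $(x, y)$ is mapped onto the canvas:

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
=
\begin{bmatrix} a & c & e \\ b & d & f \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
=
\begin{bmatrix} a x + c y + e \\ b x + d y + f \\ 1 \end{bmatrix}
$$

If we shorten `factorX`, `factorY`, `viewport.x`, and `viewport.y` to $f_x$, $f_y$, $v_x$, and $v_y$ respectively, what happens when we instead use the values we gave to `setTransform`?

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
=
\begin{bmatrix} f_x & 0 & -f_x v_x \\ 0 & f_y & -f_y v_y \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
=
\begin{bmatrix} f_x (x - v_x) \\ f_y (y - v_y) \\ 1 \end{bmatrix}
$$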

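Exactly what we had in the implementation for `pointToCanvas` above, scaled up by the device pixel ratio! This also answers both questions: the translation is applied *after* scaling, so the viewport offset has to be pre-multiplied by the factor, and it's negated because we subtract the viewport's position to move it to the canvas origin. No need for us to do the math ourselves; we'll get `<canvas>` to do it all for us 🎉.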
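The same equivalence can be checked numerically. This sketch applies the six `setTransform` arguments the way the canvas does; `applyMatrix` and `viewportMatrix` are hypothetical helpers written for illustration, and the example assumes a user-space-to-CSS ratio of 0.5 on a display with a device pixel ratio of 2.

```typescript
// The six arguments of CanvasRenderingContext2D.setTransform(a, b, c, d, e, f)
type Matrix2D = [number, number, number, number, number, number];

// Apply the matrix the way the canvas does: (a*x + c*y + e, b*x + d*y + f)
function applyMatrix([a, b, c, d, e, f]: Matrix2D, x: number, y: number): [number, number] {
  return [a * x + c * y + e, b * x + d * y + f];
}

// The arguments passed to setTransform in draw()
function viewportMatrix(factorX: number, factorY: number, viewportX: number, viewportY: number): Matrix2D {
  return [factorX, 0, 0, factorY, -factorX * viewportX, -factorY * viewportY];
}

// Ratio 0.5 (user space to CSS pixels) times a device pixel ratio of 2
const m = viewportMatrix(0.5 * 2, 0.5 * 2, 100, 50);
const [px, py] = applyMatrix(m, 120, 60);
```

The point (120, 60) ends up at (20, 10) in device pixels: (120 - 100) × 0.5 = 10 and (60 - 50) × 0.5 = 5 in CSS pixels, doubled by the device pixel ratio.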

🪩 Eliminating flicker

This approach worked really well, but it had one major issue: the canvas would flicker whenever the canvas element changed size, which happens, for example, when the canvas is sized to follow the window.

I tried to compensate for this by debouncing the resize, but then the guitar tab would stretch/compress while the window was being resized. Two approaches came to mind:

1. Use a swap chain technique: create a bigger or smaller buffer as needed and swap it in after we've rendered to it.

2. Use a fixed-size canvas and manage a secondary "viewport" that would handle the visible portion of the canvas.

After thinking about it, it was clear that (1) was a bad idea. As the user resizes, we'd still want to debounce, otherwise we'd be creating a lot of buffers, which is not a cheap operation. Also, the canvas would still stretch/compress during the time needed to both create and draw to this offscreen buffer.

So I settled on (2). I already had viewports figured out, so it was just a matter of clipping the canvas to its visible portion, which is surprisingly easy when using the `ResizeObserver` API to keep track of the parent element's size:

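```typescript
const parentElement = canvas.parentElement;
if (parentElement) {
  const parentElementObserver = new ResizeObserver((entries) => {
    // Border-box size in CSS pixels (inline/block = width/height in horizontal writing modes)
    const borderBox = entries[0].borderBoxSize[0];
    // Report the parent's visible size to the virtual canvas
    setCanvasSize(borderBox.inlineSize, borderBox.blockSize);
  });

  parentElementObserver.observe(parentElement);
}
```

And our canvas element should be the size of the entire screen: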

```tsx
<canvas
  ref={setCanvasRef}
  width={window.screen.width * window.devicePixelRatio}
  height={window.screen.height * window.devicePixelRatio}
  {...otherProps}
/>
```

With that, we never have to resize the canvas's backing buffer; we just pretend it's only as big as the visible portion of its parent element. That does mean some wasted memory, but it's a small price to pay for a smooth experience.
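As a rough illustration of what "pretending" can mean here, this sketch assumes a zoom level expressed as CSS pixels per user-space unit; `viewportSizeFor` and `visibleBufferRegion` are hypothetical helpers that a function like `setCanvasSize` might use, not the code Muzart actually runs.

```typescript
interface Size {
  width: number;
  height: number;
}

// Assumed zoom model: `zoom` is how many CSS pixels one user-space unit takes up.
// The viewport's user-space size follows the visible size, so nothing stretches.
function viewportSizeFor(visible: Size, zoom: number): Size {
  return { width: visible.width / zoom, height: visible.height / zoom };
}

// The part of the fixed, screen-sized backing buffer that is actually on screen,
// in device pixels, clamped to the buffer's real dimensions
function visibleBufferRegion(visible: Size, devicePixelRatio: number, buffer: Size): Size {
  return {
    width: Math.min(buffer.width, Math.ceil(visible.width * devicePixelRatio)),
    height: Math.min(buffer.height, Math.ceil(visible.height * devicePixelRatio)),
  };
}

const viewportSize = viewportSizeFor({ width: 800, height: 600 }, 2);
const region = visibleBufferRegion({ width: 800, height: 600 }, 1.5, { width: 2880, height: 1620 });
```

Because the zoom level doesn't change when the parent resizes, a larger window reveals more of the score instead of stretching it, and clearing and drawing could be limited to the visible region of the buffer.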

And that's it! We've created a virtual canvas that can (theoretically) render an infinitely large scene by shifting a viewport around user space, without any flicker.